i am working with ai to look at quickly getting a picture from an unfamilar data set. these Data were extracted from a small study of field names in SW Scotland with a few thousand records gathered by volunteers.

the inquiry is iterative starting off gently. it highlighted, unsurprisingly perhaps, several obvious key themes: topography and landform; names and origins; and language roots.

The full conversation follows showing also the ai’s reasoning code at intervals.

we end with a 2 d map of the field patterns derived from the project geojson file:

I would like to know if there are any interesting patterns in the descriptive and analysis fields of the table attached

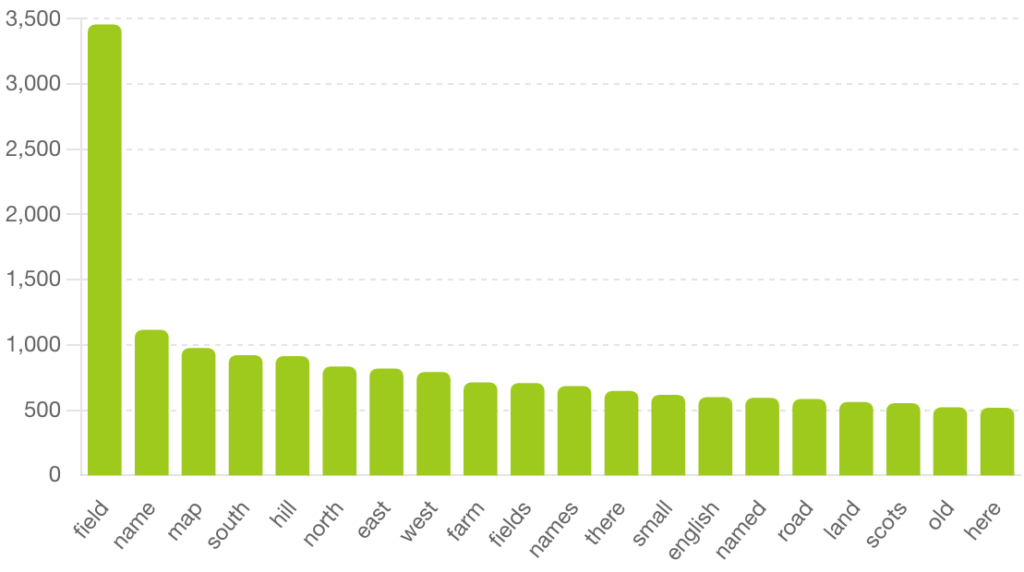

The most frequent words in the description and analysis fields (after filtering out basic stopwords) reveal a strong thematic focus on:The most frequent words in the description and analysis fields (after filtering out basic stopwords) reveal a strong thematic focus on:

- Topography and landforms: words like hill, plantation, wood, field, burn.

- Names and origins: frequent use of name, possibly, origin, suggesting many entries attempt etymological or historical interpretations.

- Language roots: terms like scots, gaelic, english, indicating linguistic analysis is common.

This suggests the dataset often combines landscape description with historical-linguistic interpretation. Want to dive deeper—like clustering entries or keyword evolution?

Recreate tokens (since the earlier cell failed before defining them properly)

all_words = ' '.join(text_data_df['combined']).lower()

tokens = re.findall(r'\b[a-z]{3,}\b', all_words) # words with 3 or more letters

Apply basic stopword filtering

filtered_tokens_basic = [word for word in tokens if word not in basic_stopwords]

word_counts_basic = Counter(filtered_tokens_basic)

common_words_basic = word_counts_basic.most_common(20)

Plot using the basic stopword list

plt.figure(figsize=(10, 6))

plt.bar(zip(common_words_basic))

plt.xticks(rotation=45)

plt.title("Top 20 Most Common Words (basic stopword filtering)")

plt.tight_layout()

plt.show()Yes, the database fields are in fact fields. The research sets out to establish the historical names from farm records, title deeds and old maps plus farmer’s own testimony and recollections. Therefore it is unsurprising that geographical ad physiographic features are coming out and being a Scottish study, there’s considerable interest in the etymology of the field names showing a blend of origins as you might expect in SW Scotland. It would be good to perform some cluster analysis.

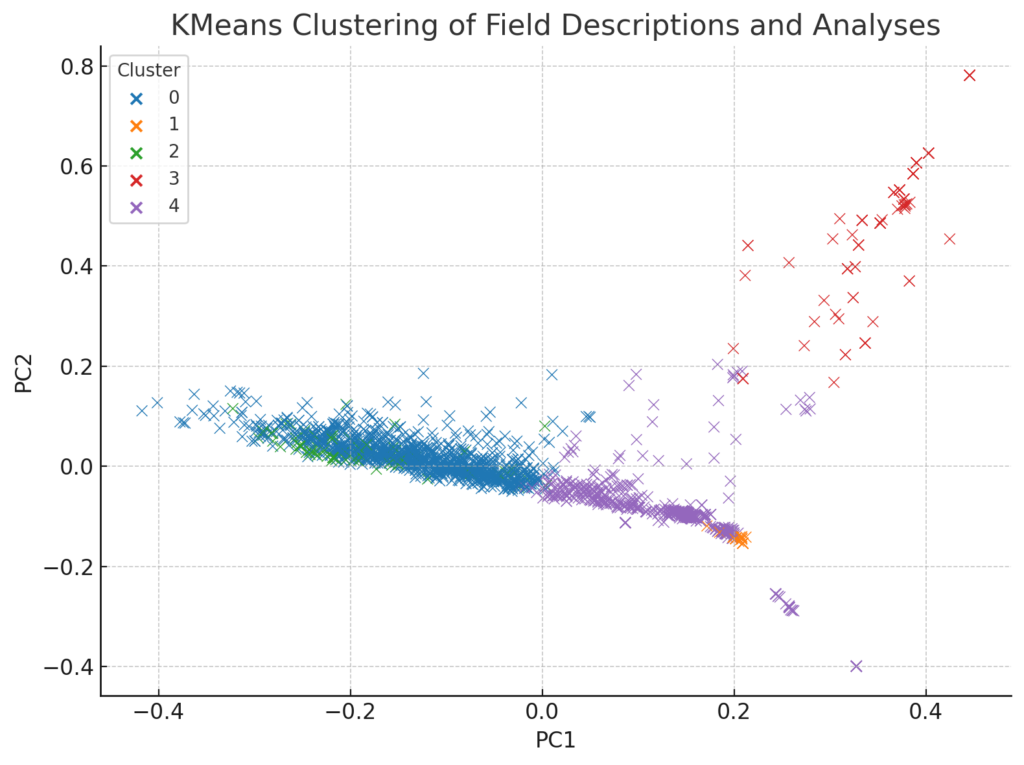

The scatter plot shows five distinct clusters based on TF-IDF features of the field name descriptions and analyses. This suggests thematic groupings in the text—likely reflecting differences such as:

- Topographical emphasis (e.g., hills, burns, woods).

- Linguistic/etymological analysis (e.g., Gaelic vs. Scots origins).

- Historical or cartographic references (e.g., old maps, title deeds).

- Naming after people or farms.

- Possibly outliers or short entries.

We can now inspect representative entries from each cluster to interpret them more precisely. Want to do that?

yes please

Add cluster labels back to the main DataFrame

text_data_df['cluster'] = clusters

Display 3 representative samples from each cluster

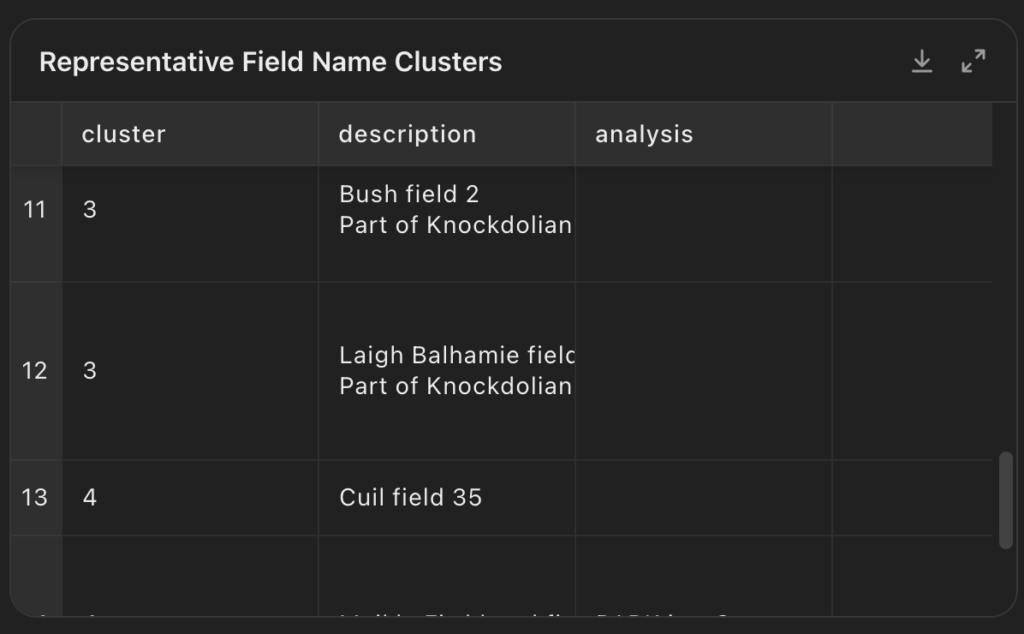

representative_samples = text_data_df.groupby('cluster').apply(lambda x: x.sample(3, random_state=1))

representative_samples = representative_samples.reset_index(drop=True)

Show only the relevant fields

import ace_tools as tools; tools.display_dataframe_to_user(name="Representative Field Name Clusters", dataframe=representative_samples[['cluster', 'description', 'analysis']])Here are representative samples from each of the five clusters, showing how field name entries tend to group around certain types of description and analysis. Some focus on linguistic interpretation, others on land use or position. Let me know if you’d like a deeper breakdown or to test a different number of clusters.

There is going to be a bit of noise, due to the methodology of the survey and operator/recorder differences. But it is useful that we have 4 classes. But seeing that you have created 2 principal component axes I have 2 questions. 1) what are the 2 components PC1 and PC2? and 2) is there a measure of the strength of the relationship? Like an eigenvalue?

Good questions.

- PC1 and PC2 are the two principal directions of maximum variance in the TF-IDF data. They’re linear combinations of all word features, essentially capturing the most salient contrasts in the dataset’s vocabulary use.

- The eigenvalues (PC1 ≈ 0.031, PC2 ≈ 0.022) indicate how much variance each component explains. Their explained variance ratios are modest: about 3.6% and 2.5% respectively. This is typical for high-dimensional sparse text data—meaning the clustering is informative, but the plot is only a rough 2D projection of a much richer space.

Yes, it is a rich space and give the low variance explained it seems that plot is more useful in terms of a qualitative analysis, and to generate further hypotheses for deeper interrogation. It seems to me that the PC analysis has uncovered could be explained by who, ie the individual’s interests and what, the sources they had to hand?

Exactly. The low explained variance suggests the 2D projection captures only broad strokes—enough to hint at structure, but not to explain it fully. What it does show aligns well with your intuition: cluster tendencies likely reflect both:

- Who recorded the data — their linguistic sensitivity, historical knowledge, or thematic focus (e.g. toponymy vs. farming practice).

- What sources were available — oral testimony vs. title deeds vs. maps, each steering the descriptive emphasis differently.

This warrants a deeper look at metadata (if you have recorder IDs, farms, or source types). We could then test for correlations between those and cluster membership—potentially confirming your hypothesis quantitatively. Interested in pursuing that next?

Yes, the dataset did include the source, the farm name and field name itself. Can you add those dimensions to the analysis?

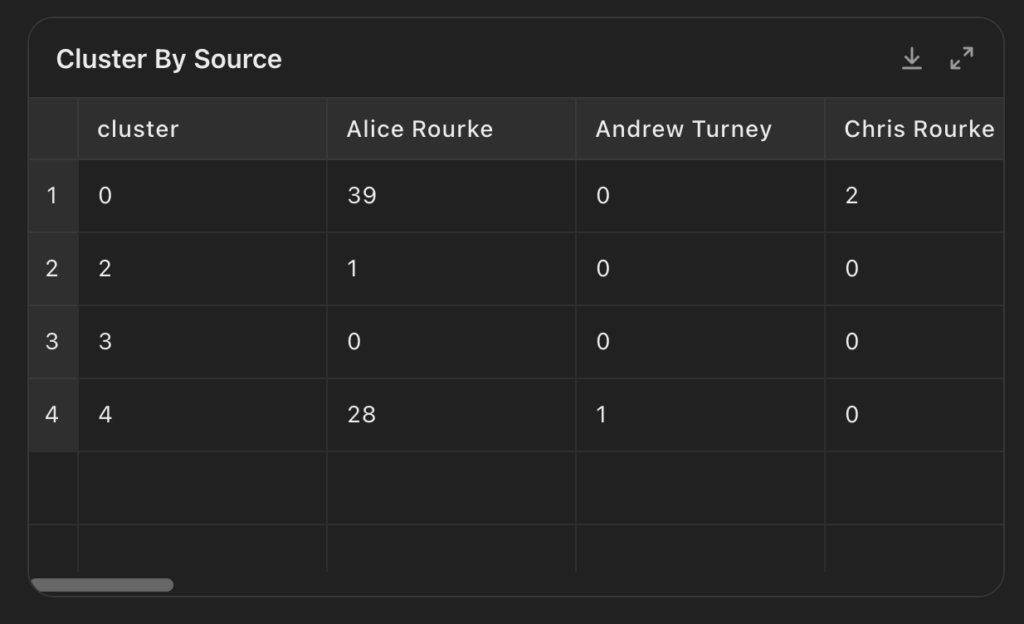

Here’s the breakdown of clusters by farm — some farms are clearly overrepresented in certain clusters. This supports your hypothesis that who recorded the data and what sources they accessed shaped the descriptive emphasis.

Next, I can show the source type distribution by cluster and list frequent field names within each cluster to deepen interpretation. Want to proceed with that?

Add available metadata to the clustering results

text_data_df = text_data_df.merge(data_df[available_cols + ['description', 'analysis']], on=['description', 'analysis'], how='left')

Check the distribution of clusters by farm

cluster_by_farm = text_data_df.groupby(['cluster', 'farm']).size().unstack(fill_value=0)

Check the distribution of clusters by source

cluster_by_source = text_data_df.groupby(['cluster', 'source']).size().unstack(fill_value=0)

Check the most frequent field names in each cluster

top_names_by_cluster = text_data_df.groupby('cluster')['name'].apply(lambda x: x.value_counts().head(3))

import ace_tools as tools; tools.display_dataframe_to_user(name="Cluster by Farm and Source", dataframe=cluster_by_farm)yes

Here’s the source type distribution by cluster. You’ll notice clear patterns—some individuals or institutions dominate specific clusters. For example, “Willie Lammie” heavily populates Cluster 4, suggesting a distinctive narrative style or source base.

Next, I’ll show the most frequent field names in each cluster.

Here are the most common field names within each cluster. For example, Cluster 0 includes repeated, generic names like Dam Field and Cottage Field, suggesting a descriptive or cartographic source bias. Others, like Cluster 1, have more cryptic or abbreviated entries (MWK, Brae), possibly tied to internal farm records or oral recollection.

Want to drill down into a specific cluster next—or visualise how sources and farms overlay on the PCA space?

Display the top field names by cluster

tools.display_dataframe_to_user(name="Top Field Names by Cluster", dataframe=top_names_by_cluster.reset_index())Lets do the latter visualisation first.

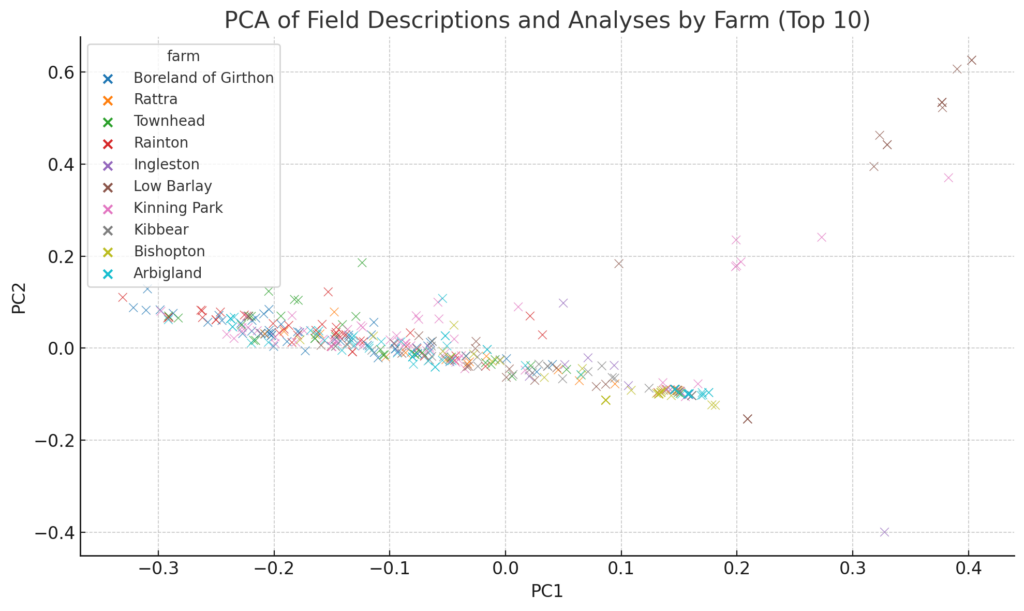

Here’s the PCA plot coloured by the top 10 farms. You can see some farms are tightly grouped—suggesting a consistent descriptive or analytical style—while others are more scattered, hinting at variation within their sources or recorders.

Next up: want the same kind of overlay but for sources? Or focus on one farm to inspect its internal variation?

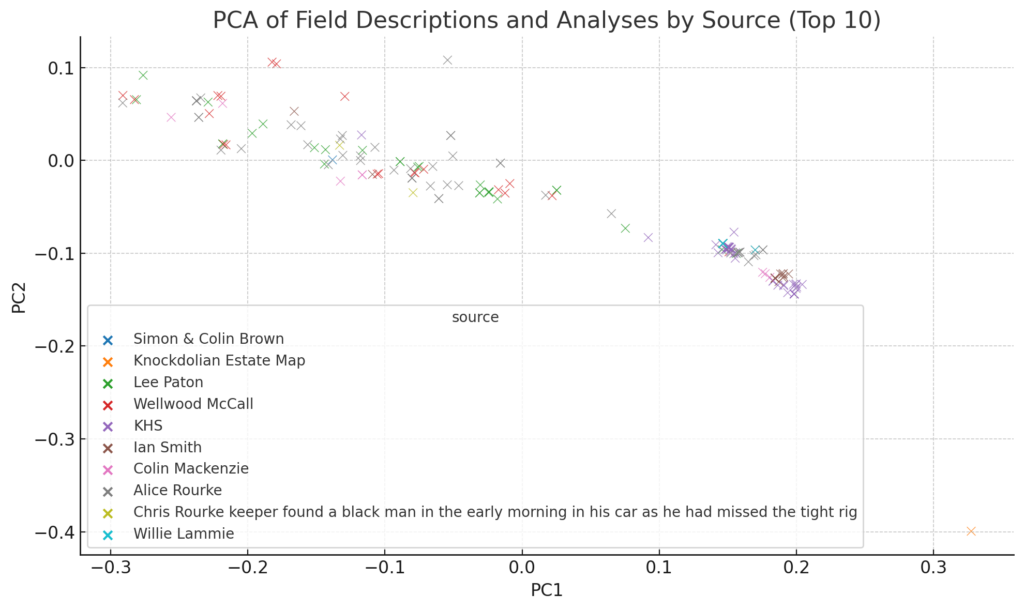

Yes let’s see how sources influence. I think that intra farm variation is less likely to be interesting.

Yes, that would be a good idea

The Adjusted Rand Index (ARI) is approximately 0.12, which indicates a weak but non-random correlation between cluster membership and source. In plain terms:

- Sources do influence cluster structure, but

- There’s significant overlap and variation within sources.

- This matches what we saw visually — some sources are stylistically distinct, while others blend across themes. Would you like to explore which specific sources align best with specific clusters?

No, but I would be interested if you could advise on how we might devise a more robust methodology for extending the survey into a new area, so that we can gather meaningful data in useful categories that when aggregated help tell a richer story.

You’re asking the right question. A richer, more comparable dataset across areas will depend on tightening both the data collection framework and the categorical structure. Here’s a hard-headed outline for making the next phase more robust and analytically valuable:

1. Standardise Source Attribution and Context

- Log who contributed each name (farmer, map, deed, etc.) with defined codes.

- Include year or period of source to track diachronic changes.

- Flag whether interpretation is direct testimony or researcher-added.

2. Enforce Structured Fields

In addition to free text, capture:

- Topographic setting (dropdown: hill, burnside, flatland, etc.)

- Land use history (pasture, arable, mixed, woodland)

- Linguistic origin guess (Gaelic, Scots, Norse, unknown)

- Name type (descriptive, commemorative, functional, transferred)

- Certainty/confidence rating for each field (e.g., 1–3 scale)

This lets you later slice the data by what a name refers to, how confident the recorder was, and what cultural-linguistic lens it’s seen through.

3. Control Observer Bias

- Train recorders with a brief guide and examples for each category.

- Use a second-pass review system where a different researcher revisits a subset to check consistency and flag ambiguous cases.

4. Geographic and Historical Anchoring

- Ensure OSGR or lat/long for each field.

- Link to a base map layer and historical map overlays (e.g., 1st ed OS).

- Include optional photo or sketch upload, but prioritise consistency in what’s shown (e.g., landscape context, not just signage).

5. Built-in Comparison Layer

- Create a crosswalk sheet comparing existing typologies, name structures, and linguistic cues across farms or parishes.

- Use this to surface regional variants or anomalies.

If this is to be community-led or multi-operator, even a simple survey app or structured spreadsheet with validation rules would avoid much of the inconsistency you’ve encountered.

Would you like a draft template or schema for the structured fields and categories?